AWS is enabling a responsible use of AI through its many solutions. (Source – Shutterstock)

How AWS is making generative AI simpler for businesses

- Amazon commits to continued collaboration with the White House, policymakers, technology organizations, and the AI community to advance the responsible and secure use of AI.

- Some of its solutions promoting fair and responsible use of AI include Amazon CodeWhisperer and Amazon’s Titan family of foundation models.

Generative AI continues to make headlines around the world. Big tech companies are ensuring they remain part of the conversation when businesses invest in the emerging technology. For AWS, AI represents a significant opportunity for businesses to not only improve their productivity and efficiency but also develop new use cases with the technology.

In fact, generative AI is expected to add up to US$7 trillion to global GDP over the next 10 years. As such, AWS is investing US$100 million in the recently announced Generative AI Innovation Center, a program to help customers build and deploy generative AI solutions.

“We believe that AI will help to drive economic growth, productivity, and operational efficiency. I think we’re just getting started by giving everyone access to these capabilities, and we’ll see more participation from industries and companies that we haven’t even heard about yet. AI is not a revolution; it’s more of an evolution to become better at doing things,” commented Olivier Klein, AWS’s Chief Technologist in Asia Pacific.

At the same time, the tech giant is also aware of the challenges that come with using generative AI. The biggest right now is having the right skills to work with it. In Southeast Asia, where generative AI adoption is picking up pace, AWS has committed to making AI and machine learning skills training and education more accessible through programs, scholarships, and grants such as the AWS Machine Learning Learning Plan, AWS DeepRacer, AWS Cloud Quest: Machine Learning Specialist, and Machine Learning University.

Olivier Klein, AWS’s Chief Technologist in Asia Pacific.

While all this is fascinating, there are still some concerning issues that need to be addressed, namely the privacy and security issues brought about by the increasing adoption of AI at work. Globally, most businesses are concerned about how generative AI sources its content. For example, developers using generative AI for coding would prefer to know the origin of the code.

Is the code proprietary? Will they face legal issues for using the code? How secure is it to share data with generative AI? Should businesses be concerned about biases in their algorithms? These are just some of the many questions developers and businesses have when working with generative AI.

“Responsible use of AI is an ongoing effort and we at Amazon are working with the industry to look at how we can design these responsible foundation models. We’re also looking into how we can avoid bias in the language model,” explained Klein.

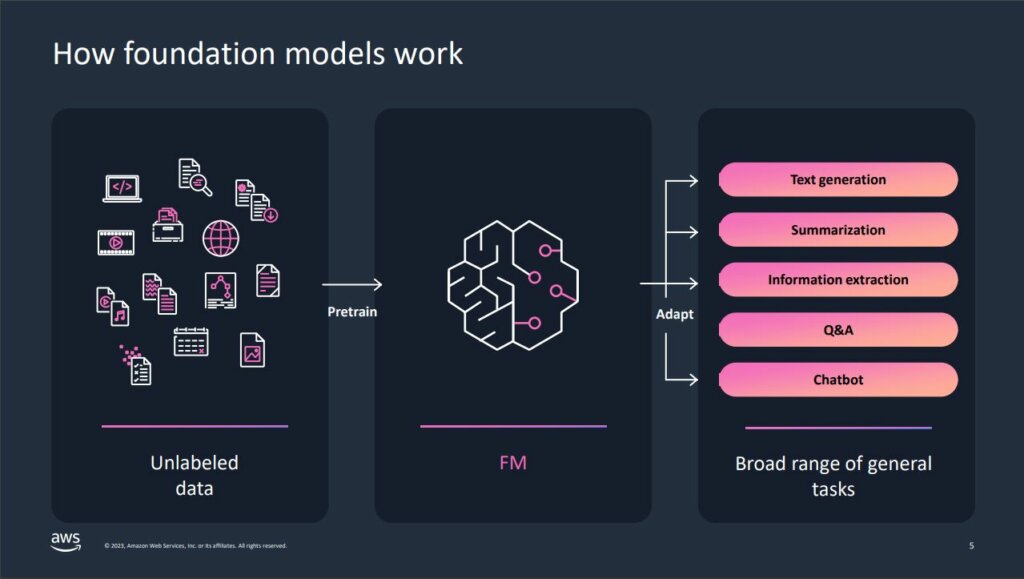

The foundation models for AI

Foundation models are a type of model trained on large datasets, without explicit instructions, to generate outputs in the form of text, images, and videos, among others. They can perform a wider range of tasks because their large number of parameters makes them capable of learning complex concepts. Through their pre-training exposure to internet-scale data in all its various forms and myriad patterns, foundation models learn to apply their knowledge within a wide range of contexts.

“While the capabilities and resulting possibilities of a pre-trained foundation model is amazing, customers get really excited because these generally capable models can also be customized to perform domain-specific functions that are differentiating to their businesses, using only a small fraction of the data and compute required to train a model from scratch. The customized foundation models can create a unique customer experience, embodying the company’s voice, style, and services across a wide variety of consumer industries, like banking, travel, and healthcare,” explained AWS’s Swami Sivasubramanian in a blog post.

Klein also pointed out that new architectures are expected to arise in the future with the diversity of foundation models setting off a wave of innovation and creativity in terms of content, i.e., conversations, stories, images, videos, and music.

How foundation models work. (Source – AWS)

Recognizing the concerns of businesses, AWS has been working to democratize machine learning and AI for over two decades, with the aim of making it accessible to anyone who wants to use it. Today, more than 100,000 customers are using dozens of AWS AI and ML services offered in the cloud. AWS has also invested in building a reliable and flexible infrastructure for cost-effective machine learning training and inference. This includes the development of Amazon SageMaker, which aims to simplify the process for developers to build, train, and deploy models.

Moreover, AWS launched a wide range of services that allow customers to add AI capabilities like image recognition, forecasting, and intelligent search to applications with a simple API call. They include:

- Amazon Bedrock – a fully managed service that makes foundation models from leading AI startups and Amazon available via an API, so businesses can choose from a wide range of FMs to find the model that’s best suited for their use case.

- Amazon EC2 Trn1 instances – purpose-built for high-performance deep learning training of generative AI models, including large language models (LLMs) and latent diffusion models.

- Amazon EC2 Inf2 instances – purpose-built for deep learning inference. They deliver high performance at the lowest cost in Amazon EC2 for generative AI models, including LLMs and vision transformers.

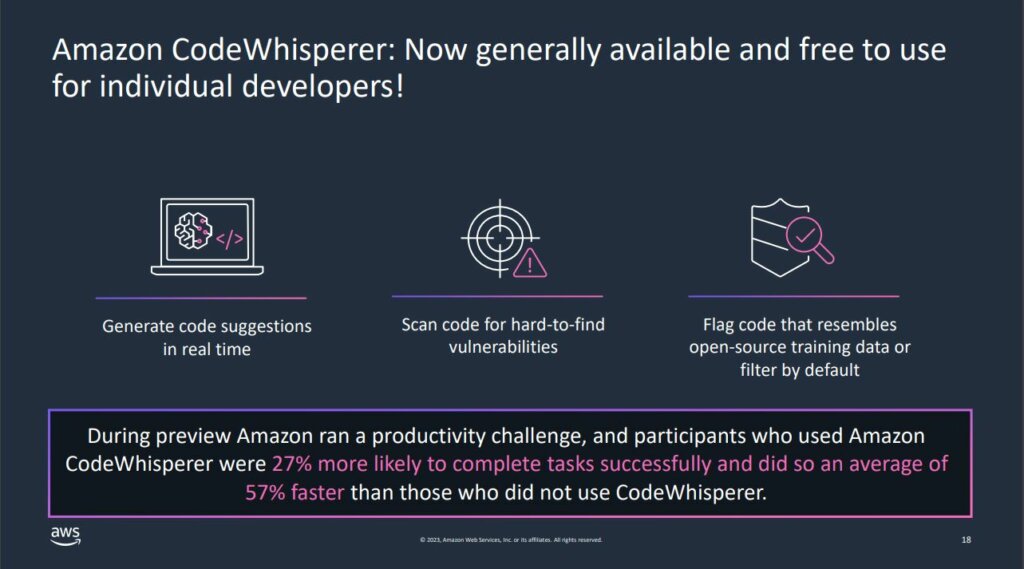

- Amazon CodeWhisperer – trained on billions of lines of code, it can generate code suggestions ranging from snippets to full functions in real time, based on comments and existing code. It also helps developers bypass time-consuming coding tasks and accelerates building with unfamiliar APIs. In terms of productivity, Amazon CodeWhisperer aims to speed up task completion by 57%.

How developers can benefit from Amazon CodeWhisperer. (Source – AWS)
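To make the "available via an API" description of Amazon Bedrock more concrete, here is a minimal sketch of what calling a Titan text model through the AWS SDK for Python (boto3) might look like. The model ID, region, and the request and response field names are assumptions based on the Titan text format and should be checked against the current Bedrock documentation; the helper function names are hypothetical and exist only for illustration.

```python
import json

# Assumed model ID for illustration only; check which models are enabled
# in your own AWS account and region.
MODEL_ID = "amazon.titan-text-express-v1"


def build_titan_request(prompt: str, max_tokens: int = 256, temperature: float = 0.2) -> str:
    """Serialize a prompt into a Titan-style JSON request body (assumed shape)."""
    if not prompt.strip():
        raise ValueError("prompt must not be empty")
    return json.dumps({
        "inputText": prompt,
        "textGenerationConfig": {
            "maxTokenCount": max_tokens,
            "temperature": temperature,
        },
    })


def extract_text(response_body: str) -> str:
    """Pull the generated text out of a Titan-style JSON response (assumed shape)."""
    payload = json.loads(response_body)
    return payload["results"][0]["outputText"]


def generate(prompt: str, region: str = "us-east-1") -> str:
    """Send the prompt to Bedrock. Requires boto3 and AWS credentials with Bedrock access."""
    import boto3  # imported lazily so the helpers above work without the AWS SDK

    client = boto3.client("bedrock-runtime", region_name=region)
    response = client.invoke_model(
        modelId=MODEL_ID,
        body=build_titan_request(prompt),
        contentType="application/json",
        accept="application/json",
    )
    # The response body is a stream of bytes containing JSON.
    return extract_text(response["body"].read().decode("utf-8"))
```

With credentials configured, a call such as `generate("Summarize our returns policy in two sentences.")` would return the model's text. Swapping in a different foundation model would mainly mean changing the model ID and the request body format, since each model family defines its own payload.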

Responsible generative AI

What’s interesting about Amazon Bedrock is that it offers access to a range of powerful foundation models for text and images, including Amazon Titan FMs, through a scalable, reliable, and secure AWS-managed service. Amazon Titan FMs are pre-trained on large datasets, making them powerful, general-purpose models. Businesses can use them as is or privately customize them with their own data for a particular task without annotating large volumes of data.

Titan FMs are built to detect and remove harmful content in the data, reject inappropriate content in the user input, and filter model outputs that contain inappropriate content (such as hate speech, profanity, and violence).

“Developers aren’t truly going to be more productive if code suggested by their generative AI tool contains hidden security vulnerabilities or fails to handle open source responsibly. CodeWhisperer is the only AI coding companion with built-in security scanning (powered by automated reasoning) for finding and suggesting remediations for hard-to-detect vulnerabilities, such as those in the top ten Open Worldwide Application Security Project (OWASP), those that don’t meet crypto library best practices, and others.

To help developers code responsibly, CodeWhisperer filters out code suggestions that might be considered biased or unfair, and CodeWhisperer is the only coding companion that can filter and flag code suggestions that resemble open source code that customers may want to reference or license for use,” explained Sivasubramanian.

Another interesting view of CodeWhisperer is that some developers see it as a better alternative to its competitor, GitHub Copilot. While Copilot is designed as a more general AI-assisted development tool, CodeWhisperer is more focused on use cases within AWS platforms.

Developers have their own views towards generative AI for coding.

AWS and AI in Malaysia

During the media briefing, Klein also highlighted how Malaysian businesses have been adopting tech with AWS. In Malaysia, AWS continues to help businesses in their digital journey. The company has already announced plans for a cloud region in the country. AWS has also committed to investing US$6 billion in Malaysia over the next 14 years to keep up with the rapid adoption of cloud services while meeting the data residency requirements of local customers.

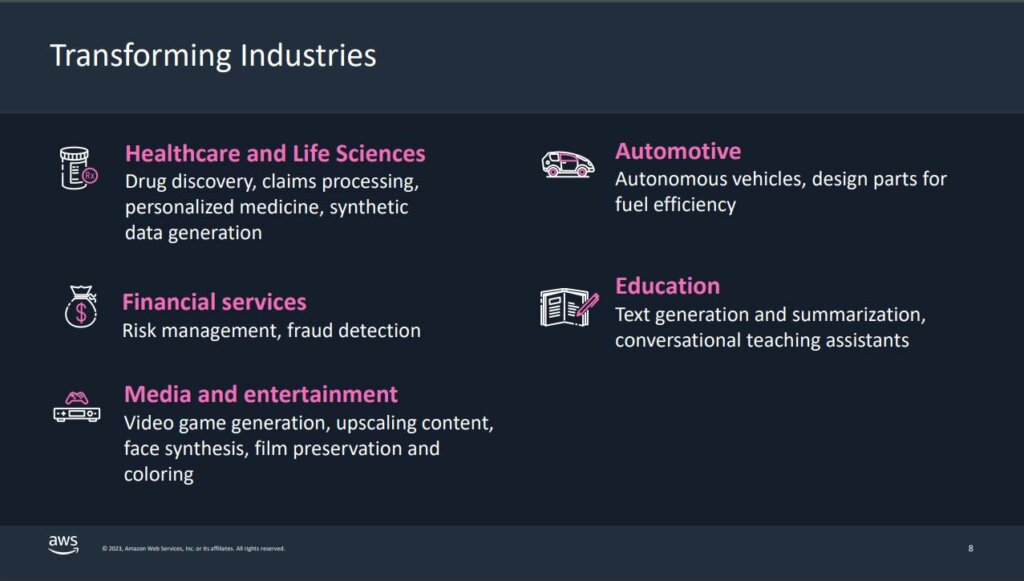

Generative AI is transforming industries. (Source – AWS)

Here’s a list of some of the Malaysian companies and government agencies that AWS has been working with.

- The Department of Polytechnic and Community College Education (DPCCE), a department within Malaysia’s Ministry of Higher Education (MOHE), moved its Learning Management System (LMS) to AWS Cloud in 2019. Using AI/ML, DPCCE is also building an intelligent behavioral profiling and results prediction solution, which helps to detect challenges in students’ learning process for early intervention and further enhance the learning experience. DPCCE now has a fully managed system to run and scale its workloads, services, and applications securely, 24/7.

- In December 2022, Pos Malaysia Berhad moved its IT infrastructure to AWS to transform its traditional mail and parcel delivery operations. It uses Amazon SageMaker, a service for creating, training, and deploying machine learning models, to forecast delivery demand and allocate resources like vehicles and personnel to meet it. Pos Malaysia is now able to narrow delivery time down to a three-hour window, enhancing the end-customer experience.

- SLA Digital worked with AWS to boost its fraud prevention capabilities with Amazon Fraud Detector, a fully managed service that uses machine learning to identify potentially fraudulent activity. It helped reduce the development time for each fraud detection model from months to weeks. With this agility, SLA Digital significantly reduced the number of fraud-related complaints from mobile operator customers within six months of deployment.

- As part of its goal to accelerate revenue growth, Maxis is using AWS’s AI/ML technology to provide high-quality digital services to 10 million customers. With AWS, Maxis was able to grow new revenue streams, improve operational and financial efficiencies, and detect and respond to business risks. Maxis can now extend the range and reach of its services while providing a highly personalized customer experience for both consumers and businesses.

- Carsome used Amazon SageMaker, a fully managed service for ML workflows, to deploy a deep learning model that achieves more accurate results during the car inspection process. The automated system made processing approximately 29 times faster, allowing inspectors to be more productive. This solution enabled Carsome to streamline its inspection process, paving the way for further optimization and integration of AI in its business operations.

“Following the AWS region launch announcement in Malaysia, we are committed to helping build the community of technologists and democratize the space of AI and machine learning, including Generative AI. I believe any company, no matter big or small, should have access to these latest and greatest capabilities and I’m excited to see what our customers are building,” said Klein.
